Fancy footwork, feedback and fun!

9 Nov

I’ve been busy in October helping colleagues: recording a brief vignette for John Unsworth about devising learning and teaching strategies, for a project he’s doing for the British Council in Ukraine, and contributing (with Kay Sambell) a piece for Santanu Vasant on changing assessment and feedback post-Covid-19, for a new blog series he and colleagues are preparing. Incidentally, you might like to see our article on the changing landscape of assessment, translated into Spanish (thanks to Ceridwen Coulby of Liverpool University, who is going to use it for a British Council project in Peru). You can find it here: The-changing-landscape-I-June-Espanol.docx

I’ve also been continuing my project with UCLAN on enhancing the student experience plus my work with Galway University supporting their academic promotions panel.

But even in my leisure time a pedagogic professor’s got to pedagogically profess! So here for your delectation is my latest post on what we can learn from the UK show ‘Strictly Come Dancing’ about assessment and feedback: I hope it doesn’t destroy your weekend nights!

What can Strictly tell us about assessment and feedback in universities?

I used to be a bit of a TV snob before lockdown, telling friends I rarely watched television because I was too busy (other than CBeebies with the grandchildren and the occasional police drama set in the North East, which is where I live). Covid-19 has dramatically changed my habits though, and to cope with the fear and uncertainty of the pandemic, Phil and I have decided to throw ourselves into watching the full season of Strictly Come Dancing. Decades ago I loved to ballroom dance myself, especially when expert uncles guided me round the floor, and now I adore the costumes, the glitter and the pizzazz, but most of all the ability to shut off for 90 minutes at a time from the gruesomeness of our current situation. What I hadn’t expected was how much my day job as an educational developer and pedagogical consultant with expertise in assessment and feedback would be informed by what I thought was purely a leisure pursuit!

This is an ongoing process (we are only part-way through the series) so I imagine I will have more to say later, and no doubt fellow experts and Strictly fans will have plenty to add! But here are my initial thoughts.

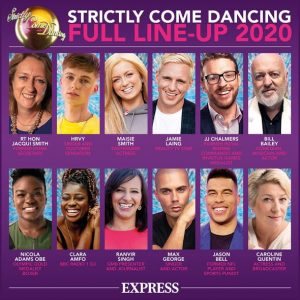

Who gets on to the show is a risky business, just like university admissions. Sometimes we take a risk on who to enrol, hoping they might improve from an unpromising start and the same is true of contestants (who would have thought Bill Bailey would do so well?). We are all too quick to judge what people can or can’t do, and so who is permitted to engage is often limited by the smallness of our minds.

The assessment judgment process can be grim for learners: watching the contestants waiting to hear if they will come back to dance the next week, we can see how badly they fear a negative judgment (xx I’m looking at you!). It is much the same for our students, in whose faces we can see the emotional impact of assessment (Clegg in Bryan and Clegg, 2019) writ large, and for whom university degrees are likely to have much more long-term effects on their lives than a game show.

Criteria: the judges are giving scores based on a significant level of knowledge about technical aspects of dancing (‘connoisseurship’: Orr, 2010, ‘We kind of try to merge our own experience with the objectivity of the criteria’) which is not available to non-specialists. Non-specialists tend to use very different criteria about the dancers, such as being physically attractive or behaving in ways that surprisingly exceed normal expectations, rather than technical competence. That is why laypeople’s judgments tend to differ so greatly from the judges’, and why Phil and I often yell at the judges when they get it wrong by our reckoning. What is also scary is how often we are able to jump to an impression mark, based on nothing more than gut feeling, and hold to it despite contrary expert views (remind you of anything?). And is there a halo effect which stems from expectations derived from previous performance (“but she did brilliantly last time, I can’t believe she got such low marks this time!”)? And depending on which judge delivers their verdict first, do other judges adjust their marks accordingly? This certainly happens in university double marking when the first marker is much more eminent and experienced than the second (which is why each should mark independently and only compare scores afterwards).

Hidden agendas: a lot rests on the choreography the professional has chosen to showcase the contestant’s skills, and what they choose can affect how well the contestant performs (‘not really much chachacha in your chachacha!’). Costumes, hair and make-up, all aspects of performance beyond the dancers’ control, often have a strong impact on scores, which replicates some aspects of university assessment.

It’s also the case that some features of the competition are covert rather than explicit: we don’t know how much the public vote contributes to the decisions about who gets into the dance-off, and this may make it seem unfair. In universities we argue that students are less likely to complain about fairness if the process is open and explicit (Sambell et al, 1997).

Peer assessment: the phone-in votes mirror some aspects of peer assessment. Inexperienced peers sometimes tend to vote high to support friends, and that is emulated in the way phone-in votes are sometimes cast on the basis of factors other than dancing skills. Over the series we probably get better at making criterion-based evaluations, but I guess sympathy for the struggling underdog and fellow feeling will continue to kick in (just like in HE assessment).

Feedback: Craig’s feedback is brutal (couched in what David Boud might call ‘final language’, giving learners nowhere to go) but informative, and Nicola was able to avoid leaving the show because overnight she learned from his formative feedback and upped her game in the dance-off. Motsi’s feedback is less helpful: it’s largely encouraging but not that informative, though you can see that her positivity lifts contestants getting disappointing scores from the others. Shirley’s can be seen as the Goldilocks evaluations: not as predictably and melodramatically fierce as Craig’s, but equally based on rigorous standards, and with the warmth and empathy that Motsi shows. Arguably all university assessors should aim to be like Shirley?

What needs to happen if participants are to avoid devastating disappointment is that they need progressively to develop inner feedback (Nicol, 2020) whereby they are better able to understand what good quality performances comprise (Sadler, 2010), having learned (if they are wise!) from watching the judges’ critiques of their peers, and hence are better able to make evaluative judgments of their own dancing.

So sorry to readers of this blog if I have ruined the escapism of your weekends by this intrusion of pedagogic perambulations: if you want to add your thoughts, why not tweet in response to my pinned tweet @ProfSallyBrown?

References

Bryan, C. and Clegg, K. eds., 2019. Innovative assessment in higher education: A handbook for academic practitioners. Routledge.

Nicol, D., 2020. The power of internal feedback: exploiting natural comparison processes. Assessment & Evaluation in Higher Education, pp.1-23.

Orr, S., 2010. We kind of try to merge our own experience with the objectivity of the criteria: The role of connoisseurship and tacit practice in undergraduate fine art assessment. Art, Design & Communication in Higher Education, 9(1), pp.5-19.

Sadler, D.R., 2010. Beyond feedback: Developing student capability in complex appraisal. Assessment & Evaluation in Higher Education, 35(5), pp.535-550.

Sambell, K., Brown, S. and McDowell, L., 1997. “But Is It Fair?”: An Exploratory Study of Student Perceptions of the Consequential Validity of Assessment. Studies in Educational Evaluation, 23(4), pp.349-371.